You might think that this statement is a given, but you can’t imagine how many enthusiastic new managers set about creating and developing new business ideas based only on passion and personal hunches. In no way am I saying that they are going to fail, because doing what you love is a great bonus in a business, but a more pragmatic approach would be recommended. Such a way of doing things is based on facts, and facts are very hard to obtain at the beginning of something new. This period is considered the most unstable because of all the possible directions and turnarounds that can be taken.

Tag Archives: web scraping

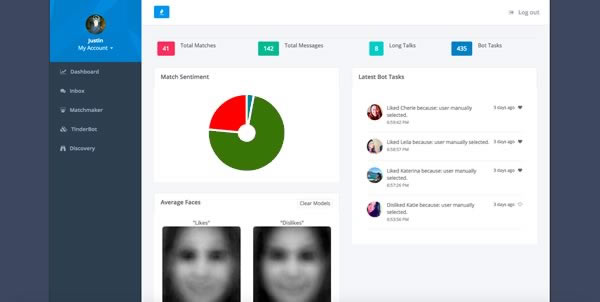

Data science automates love

The Tinder app is nothing new to anybody, since most of us have slowly accepted it into our lives, but it also brings some displeasure. For instance, this guy thought he could automate the process with an app that decides whether you’d like a person and starts a conversation.

Our year in review

From the very beginning, one of the core services TheWebMiner provided was aggregated data and insight into the mobile app landscape. We managed to offer our clients custom aggregated data for all major mobile app marketplaces (iOS AppStore, Google Play, Amazon AppStore etc.) as well as primary analysis on the extracted data.

Get started with microformats

Microformats are small patterns that can be embedded into your HTML for easier recognition and representation of commonly published material, like people, events, dates or tags. Even though web content is fully capable of automated processing, microformats simplify the process by attaching semantics to it, and so lead the way to more professional automated processing. Many advantages can be found in favor of microformats, but the most crucial are these.

At this point I should mention that microformats are a huge relief in web scraping, because they define lightweight standards for declaring information in any web page. A related HTML5 concept is Microdata, which lets you define custom vocabularies and attach properties to the items they describe.

Now that you know what microformats are, we should focus on the getting started part. A really useful, quick and detailed guide can be found here, and more complex tasks are also covered. Now the only thing left is to wish you good luck implementing it.
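To give a feeling for why microformats help a scraper, here is a minimal sketch in Python. It assumes a page marked up with the microformats2 `h-card` pattern (`p-name`, `p-org`, `u-url` class names); the sample HTML, the `HCardParser` class and the example.com address are made up for illustration, and the parser ignores nesting, so treat it as a toy rather than a full microformats parser.

```python
from html.parser import HTMLParser

# Hypothetical page fragment using microformats2 h-card class names.
SAMPLE = """
<div class="h-card">
  <a class="p-name u-url" href="https://example.com/jane">Jane Doe</a>
  <span class="p-org">Example Corp</span>
</div>
"""


class HCardParser(HTMLParser):
    """Collects p-* text properties and the u-url link of a single h-card."""

    def __init__(self):
        super().__init__()
        self.card = {}
        self._pending = []  # property names waiting for the next text node

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        classes = (attrs.get("class") or "").split()
        # "p-name" -> "name", "p-org" -> "org"
        self._pending = [c[2:] for c in classes if c.startswith("p-")]
        if "u-url" in classes and attrs.get("href"):
            self.card["url"] = attrs["href"]

    def handle_data(self, data):
        text = data.strip()
        if text and self._pending:
            for name in self._pending:
                self.card.setdefault(name, text)
            self._pending = []


parser = HCardParser()
parser.feed(SAMPLE)
print(parser.card)
```

Because the class names carry the meaning, the scraper does not need to know anything about the layout of the page: it only looks for the agreed property names.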

Guesstimation

Today’s post is about something we’ve been wanting to write about for some time. Although it is not related to web scraping, it has to do with making a decision without using a very large amount of resources, an approach that has proven its efficiency in a number of cases.

Guesstimation, a concept first used in the early 1930s (not quite new, as we can see), means exactly what the two words it is made of suggest. On the one hand we have the word guess, denoting a not very accurate way of determining things, and on the other the word estimation, the process of finding an approximation whose value is then used to work out a series of other factors. Altogether, the word refers to an estimate made without adequate or complete information or, more strongly, an estimate arrived at by guesswork or conjecture.

Guesstimations in general are a very interesting subject because of the factors that lead to the result. Some rather amusing examples were given by Sarah Croke and Robin Blume-Kohout from the Perimeter Institute for Theoretical Physics and Robert McNees from Loyola University in Chicago. When asked how much memory a person would need to store a lifetime of events, they calculated the answer at simply 1 exabyte, on the assumption that the human eye works just like a video camera recording everything that happens around us.
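A guesstimate like this is easy to sanity-check by running it backwards. The sketch below takes the 1 exabyte figure from above and asks what recording rate it implies; the 80-year lifetime and the function name are my own assumptions, not part of the original estimate.

```python
EXABYTE = 10**18                      # bytes, decimal (SI) definition
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def implied_rate_bytes_per_second(total_bytes, lifetime_years):
    """Average recording rate needed to fill total_bytes over a lifetime."""
    return total_bytes / (lifetime_years * SECONDS_PER_YEAR)


# Assumption: an 80-year lifetime, recorded around the clock.
rate = implied_rate_bytes_per_second(1 * EXABYTE, lifetime_years=80)
print(f"Implied rate: {rate / 1e6:.0f} MB per second")
```

Under these assumptions the rate comes out at roughly 400 MB per second, which is in the territory of a high-resolution, lightly compressed video stream, so the video camera assumption and the 1 exabyte answer are at least consistent with each other.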

Funny or not, guesstimations have step by step become a part of our lives, through rough conclusions based on economics and used by marketers.

Web Scraping’s 2013 Review – part 2

As promised, we are back with the second part of this year’s web scraping review. Today we will focus not only on the events of 2013 that concerned web scraping but also on Big Data and what this year meant for the concept.

First of all, we cannot talk about the conferences in which data mining was involved without mentioning the TED conferences. This year the speakers focused on the power of data analysis to help medicine and to prevent possible crises in third world countries. Regarding data mining, everyone agreed that this is one of the best ways to obtain virtual data.

Also, a study by MeriTalk, a government IT networking group, commissioned by NetApp, showed this year that companies are not prepared for the information revolution. The survey found that state and local IT pros are struggling to keep up with data demands. Just 59% of state and local agencies are analyzing the data they collect and less than half are using it to make strategic decisions. State and local agencies estimate that they have just 46% of the data storage and access, 42% of the computing power, and 35% of the personnel they need to successfully leverage large data sets.

Some economists argue that it is often difficult to estimate the true value of new technologies, and that Big Data may already be delivering benefits that are uncounted in official economic statistics. Cat videos and television programs on Hulu, for example, produce pleasure for Web surfers — so shouldn’t economists find a way to value such intangible activity, whether or not it moves the needle of the gross domestic product?

We will end this article with some numbers about the enormous growth of data available on the internet. There were 30 billion gigabytes (30 exabytes) of video, e-mails, Web transactions and business-to-business analytics in 2005. The total is expected to reach more than 20 times that figure in 2013, with off-the-charts increases to follow in the years ahead, according to research conducted by Cisco. As you can see, we have good reason to believe that 2014 will be at least as good as 2013.

Web Scraping’s 2013 Review – part 1

Here we are, almost at the end of another year, with the chance to analyze the Web scraping market over the last twelve months. First of all I want to highlight the buzzwords of the tech field as published in Yahoo’s year in review article. According to Yahoo, the most searched items were:

- iPhone (including 4, 5, 5s, 5c, and 6)

- Samsung (including Galaxy, S4, S3, Note)

- Siri

- iPad Cases

- Snapchat

- Google Glass

- Apple iPad

- BlackBerry Z10

- Cloud Computing

It’s easy to see that none of these terms relates in any way to the field of data mining; they focus instead on the gadgets and apps industry, which is just one of the directions technology can evolve in. As for the data mining industry itself, there was a lot of talk about it at this year’s MIT Engaging Data 2013 conference. One of the speakers, Noam Chomsky, gave an acid speech on data extraction and its connection to the Big Data phenomenon that is also on everyone’s lips this year. He defined a good way to see if Big Data works by following a few simple factors:

1. It’s the analysis, not the raw data, that counts.

2. A picture is worth a thousand words.

3. Make a big data portal (Not sure if Facebook is planning on dominating in cloud services some day)

4. Use a hybrid organizational model (We’re asleep already, soon) let’s move

5. Train employees

Another interesting statement came from EETimes: “Data science will do more for medicine in the next 10 years than biological science.” That says a lot about the volume of extracted data that will be required.

Because we want to cover as many data mining events as possible, this article will be a two-parter, so don’t forget to check our blog tomorrow, when the second part will come up!

Can robots.txt protect website from scraping?

No. Robots.txt is only a formal guide for web crawlers (especially search engines), and following it is voluntary.

With robots.txt you can keep unwanted pages or sections from appearing in search engines, but it can’t stop bots from parsing those pages.
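The point is easier to see in code. Below is a small sketch using Python’s standard `urllib.robotparser`; the rules, the `ExampleBot` name and the example.com URLs are hypothetical. A polite crawler asks `can_fetch()` before every request, but nothing forces a scraper to ask at all.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for an imaginary site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

rules = RobotFileParser()
rules.parse(ROBOTS_TXT.splitlines())


def polite_crawler_may_fetch(url, agent="ExampleBot"):
    """What a well-behaved crawler checks before requesting a URL."""
    return rules.can_fetch(agent, url)


print(polite_crawler_may_fetch("https://example.com/public/page.html"))
print(polite_crawler_may_fetch("https://example.com/private/report.html"))

# A scraper that never calls can_fetch() is not stopped by anything here:
# robots.txt is a request to crawlers, not an access control mechanism.
```

If a page really has to stay away from bots, it needs protection on the server side (authentication, rate limiting and so on), not just a line in robots.txt.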

DOM versus Regex in web scraping

In the web scraping field there are two methods for filtering data, and the question is: which is best?

The correct answer is: it depends.

The first is to use a DOM (Document Object Model) parser and the second is regex matching (regex is short for regular expressions). Both have advantages and disadvantages.

DOM Parser

| Advantages | Disadvantages |
|---|---|
| Simple to code | Uses more memory |
| | Sensitive to bad HTML |

Regex

| Advantages | Disadvantages |
|---|---|
| Insensitive to bad HTML | Uses more CPU |
| | More difficult to code |
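The trade-off in the two tables can be sketched with the Python standard library. This is only an illustration under stated assumptions: the two HTML snippets and the function names are made up, and `xml.dom.minidom` is a strict XML parser standing in for a DOM parser, so it is more sensitive to bad markup than the lenient HTML parsers many scraping libraries use.

```python
import re
from xml.dom import minidom
from xml.parsers.expat import ExpatError

# Hypothetical snippets: the second one has unclosed <li> tags.
GOOD = '<ul><li class="price">10</li><li class="price">20</li></ul>'
BAD = '<ul><li class="price">10<li class="price">20</ul>'

PRICE_RE = re.compile(r'<li class="price">\s*(\d+)')


def prices_with_regex(html):
    """Pattern matching on the raw text; no tree is built."""
    return [int(value) for value in PRICE_RE.findall(html)]


def prices_with_dom(html):
    """Build the whole document tree in memory, then walk it."""
    doc = minidom.parseString(html)
    return [
        int(li.firstChild.data)
        for li in doc.getElementsByTagName("li")
        if li.getAttribute("class") == "price"
    ]


for label, html in (("good", GOOD), ("bad", BAD)):
    print(label, "regex:", prices_with_regex(html))
    try:
        print(label, "dom:  ", prices_with_dom(html))
    except ExpatError as error:
        print(label, "dom:   failed to parse:", error)
```

The DOM version reads more naturally and survives changes in attribute order or whitespace, but it needs the whole tree in memory and a document it can parse. The regex keeps working on the broken snippet, at the price of a pattern that is tied to the exact text of the tag.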

Hello Big Data

If you are interested in the scraping business you have probably heard by now of a concept called Big Data. This is, as the name says, a collection of data so big and complex that it is very hard to process. Nowadays it is estimated that a typical Big Data set is in the range of tens of exabytes (one exabyte being 10 to the power of 18 bytes), and it is estimated that by 2020 more than 18000 exabytes of data will have been created.
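Numbers of this size are hard to picture, so here is a short sketch that converts the figures above between units. It assumes the decimal (SI) prefixes, where each step is a factor of 1000; the constant and variable names are only illustrative.

```python
# Decimal (SI) storage units, in bytes.
TERABYTE = 10**12
EXABYTE = 10**18
ZETTABYTE = 10**21

typical_big_data_set = 10 * EXABYTE     # "tens of exabytes", lower end
projected_by_2020 = 18_000 * EXABYTE    # figure quoted in the text

print(typical_big_data_set)                  # size in bytes
print(typical_big_data_set // TERABYTE)      # the same size in terabytes
print(projected_by_2020 // ZETTABYTE)        # the 2020 projection in zettabytes
```

In other words, 18000 exabytes is 18 zettabytes, and even the lower end of “tens of exabytes” is ten million terabytes.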

There are many pros and cons of Big Data because, while some organisations wouldn’t know what to do with a collection of data bigger than a few dozen terabytes, others wouldn’t consider analyzing data smaller than that. One of the major cons attributed to Big Data is that, with such a large amount of data, correct sampling is very hard to do, so major errors can disrupt the analysis. On the other hand, Big Data has brought a revolution in science and, more generally, in the economy. It is enough to think that in Geneva alone, the Large Hadron Collider has more than 150 million sensors delivering data about 40 million times per second, covering nearly 600 million collisions per second. As for the business sector, the one we are interested in, Amazon handles queries from more than half a million third party sellers each day, dealing with millions of back end operations. Another example is Facebook, which has to handle more than 50 billion photos from its users.

Generally, there are 4 main characteristics of Big Data. The first, and the most obvious, is volume, which I have already mentioned is growing at an exponential rate. The second is the speed (velocity) of Big Data. This also grows in direct connection with the volume, because processing units are expected to get faster as the world evolves. The third is the variety of data. Only 20 percent of all data is structured, and only structured data can be analyzed by traditional approaches. Structured data is directly connected to the fourth characteristic, veracity, which is essential for the whole process to give accurate results.

To end, I would say that even if not many have heard of it, Big Data is already a part of our lives and has been influencing the world we live in for many years. This influence can only grow in the next decades, until everybody has heard of it and of how decisions are made through Big Data.