Hello internet,

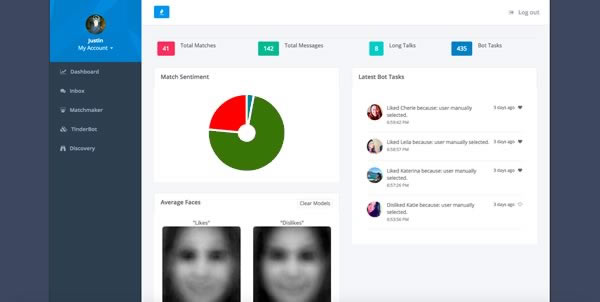

As you probably know, we deal with data scraping every day, which is quite challenging. From time to time, though, we ask ourselves what else is out there and, in particular, whether we can scrape something other than raw data. The answer is yes, we can, and today I am going to talk about how opinion mining can help you.

Opinion mining, better known as sentiment analysis, deals with automatically scanning a text and establishing its nature or purpose. One of the basic tasks is to determine whether a text is broadly positive or negative, for instance in how it relates to the subject mentioned in its title. This is not easy, because a message can take many forms.

Sentiment analysis can also be used to analyze entries and state the feelings they express (happiness, anger, sadness). This can be done by assigning a mark from -10 to +10 to each word generally associated with an emotion. The score of each word is looked up, and the word scores are then combined into a score for the whole text. For this technique to give a correct analysis, negations must also be identified, since "not happy" expresses the opposite of "happy".
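To make the word-scoring idea concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: the tiny `LEXICON`, the `NEGATIONS` list, the `score_text` function, and the rule that a negation flips the sign of the next emotion word. A real system would use a much larger word list and a more careful treatment of negation.

```python
import re

# Hypothetical toy lexicon: word -> mark between -10 and +10 (illustrative values only).
LEXICON = {"love": 8, "happy": 7, "good": 5, "bad": -5, "sad": -6, "angry": -7, "hate": -8}

# Hypothetical list of negation words.
NEGATIONS = {"not", "no", "never", "don't", "isn't", "wasn't"}


def score_text(text):
    """Sum the marks of all emotion words, flipping the sign after a negation."""
    tokens = re.findall(r"[a-z']+", text.lower())
    total = 0
    negate = False
    for token in tokens:
        if token in NEGATIONS:
            negate = True  # remember the negation until the next emotion word
            continue
        mark = LEXICON.get(token, 0)
        if mark != 0:
            total += -mark if negate else mark
            negate = False  # assumption: a negation affects one emotion word only
    return total


print(score_text("I am not happy"))        # negated positive word
print(score_text("I love this good day"))  # two positive words
```

With this toy lexicon, the first sentence gets a negative score because "not" flips "happy", while the second one simply adds up the marks of "love" and "good".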

Another research direction is subjectivity/objectivity identification. This refers to classifying a given text as either subjective or objective, which is also a difficult job because of the many complications that may occur (think of an objective newspaper article that quotes a statement from somebody). The results also depend on the definition of subjectivity that people use.
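The newspaper example can be illustrated with a very rough sketch. It assumes a hypothetical list of cue words (`SUBJECTIVE_CUES`) and a hypothetical `subjectivity` function that returns the share of cue words in a text, optionally ignoring anything inside double quotes. This is only one naive way to look at the problem, not an established method.

```python
import re

# Hypothetical cue words that often signal a personal opinion (illustrative only).
SUBJECTIVE_CUES = {"think", "believe", "feel", "awful", "wonderful", "best", "worst"}


def subjectivity(text, ignore_quotes=True):
    """Return the fraction of words that are subjective cue words."""
    if ignore_quotes:
        # Drop quoted statements so they do not count against the author.
        text = re.sub(r'"[^"]*"', " ", text)
    words = re.findall(r"[a-z']+", text.lower())
    if not words:
        return 0.0
    return sum(word in SUBJECTIVE_CUES for word in words) / len(words)


article = 'The mayor said "I think this is the best plan" on Monday.'
print(subjectivity(article))                       # quote ignored
print(subjectivity(article, ignore_quotes=False))  # quote counted
```

The same article looks objective when the quote is ignored and partly subjective when it is counted, which shows how much the result depends on the definition one chooses.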

The last and most refined type of analysis is called feature-based sentiment analysis. It deals with the individual opinions of ordinary users, extracted from text, about a certain product or subject. With it, one can determine whether the user is happy or not.
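As a rough illustration of the feature-based idea, the sketch below splits a review into sentences and credits the opinion words of each sentence to the product features mentioned in it. The `ASPECTS` set, the `OPINIONS` marks, and the `aspect_sentiment` function are all hypothetical, and tying opinions to features by sentence is a simplifying assumption.

```python
import re
from collections import defaultdict

# Hypothetical product features and opinion words (illustrative values only).
ASPECTS = {"battery", "screen", "price"}
OPINIONS = {"great": 6, "good": 4, "poor": -4, "terrible": -7}


def aspect_sentiment(review):
    """Return a score per feature, based on opinion words in the same sentence."""
    scores = defaultdict(int)
    for sentence in re.split(r"[.!?]+", review.lower()):
        words = re.findall(r"[a-z']+", sentence)
        sentence_score = sum(OPINIONS.get(word, 0) for word in words)
        # Credit the sentence score to every feature named in the sentence.
        for aspect in ASPECTS.intersection(words):
            scores[aspect] += sentence_score
    return dict(scores)


review = "The battery is great. The screen is terrible! Price is good."
print(aspect_sentiment(review))
```

For this review, the user comes out happy with the battery and the price but unhappy with the screen, which is more useful than a single score for the whole text.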

Open source software tools use machine learning, statistics, and natural language processing techniques to automate sentiment analysis on large collections of texts, including web pages, online news, internet discussion groups, online reviews, blogs, and social media. Knowledge-based systems, by contrast, make use of publicly available resources to extract the semantic and affective information associated with natural language concepts.

That was all about sentiment analysis, which TheWebMiner is considering implementing soon. I hope you enjoyed it and learned something useful and interesting.